Dy-Sigma: A Generalized Accelerated Convergence Strategy for Diffusion Models

Abstract

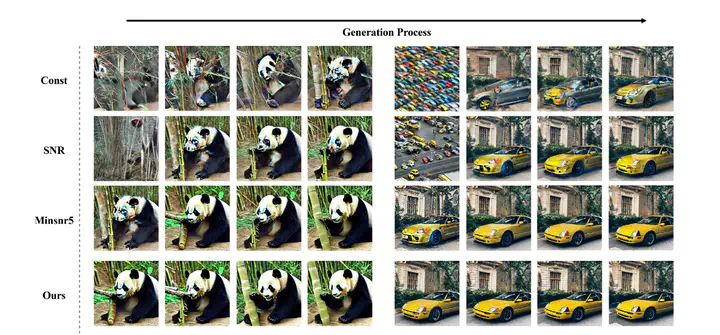

Recently, text-guided diffusion models have demonstrated outstanding performance in image generation, opening up new possibilities for specific tasks in various domains. Consequently, there is an urgent demand for training diffusion models tailored to specific tasks. The prolonged training duration and sluggish convergence of diffusion models present a formidable challenge when expediting the convergence process. Furthermore, there is still room for improvement in existing loss weight strategies. Firstly, the predefined loss weight strategy based on SNR (signal-to-noise ratio) transforms the diffusion process into a multi-objective optimization problem. However, reaching Pareto optimality under this strategy requires considerable time.In contrast, the unconstrained optimization weight strategy can achieve lower objective values, but the fluctuating loss weights for each task result in unstable changes, leading to low training efficiency. In this paper, we propose a new loss weight strategy, Dy-Sigma, that combines the advantages of predefined and learnable loss weights, effectively balancing the gradient conflicts in multi-objective optimization. Experimental results demonstrate that our strategy significantly improves convergence speed, being \textbf{3.7 times} faster than the Const strategy.